Pretty soon we will all be connected to the internet all the time. Satellites will fill in the last remaining dead zones. Cheap ubiquitous connectivity is going to break many assumptions of the software industry. Afterwards it will appear to have been inevitable.

Continue reading “Ubiquitous connectivity and duplex applications”Technical Dimensions of Programming Systems

I forgot to mention that I coauthored a paper with Joel Jakubovic, a grad student of my collaborator Tomas Petricek, published in The Programming Journal. We got the Editors’ Choice award. Tomas made a nifty website about it.

Abstract

Programming requires much more than just writing code in a programming language. It is usually done in the context of a stateful environment, by interacting with a system through a graphical user interface. Yet, this wide space of possibilities lacks a common structure for navigation. Work on programming systems fails to form a coherent body of research, making it hard to improve on past work and advance the state of the art.

In computer science, much has been said and done to allow comparison of programming languages, yet no similar theory exists for programming systems; we believe that programming systems deserve a theory too.

We present a framework of technical dimensions which capture the underlying characteristics of programming systems and provide a means for conceptualizing and comparing them.

We identify technical dimensions by examining past influential programming systems and reviewing their design principles, technical capabilities, and styles of user interaction. Technical dimensions capture characteristics that may be studied, compared and advanced independently. This makes it possible to talk about programming systems in a way that can be shared and constructively debated rather than relying solely on personal impressions.

Our framework is derived using a qualitative analysis of past programming systems. We outline two concrete ways of using our framework. First, we show how it can analyze a recently developed novel programming system. Then, we use it to identify an interesting unexplored point in the design space of programming systems.

Much research effort focuses on building programming systems that are easier to use, accessible to non-experts, moldable and/or powerful, but such efforts are disconnected. They are informal, guided by the personal vision of their authors and thus are only evaluable and comparable on the basis of individual experience using them. By providing foundations for more systematic research, we can help programming systems researchers to stand, at last, on the shoulders of giants.

Frieda

Excerpted from: Future of end-user software engineering: beyond the silos [PDF].

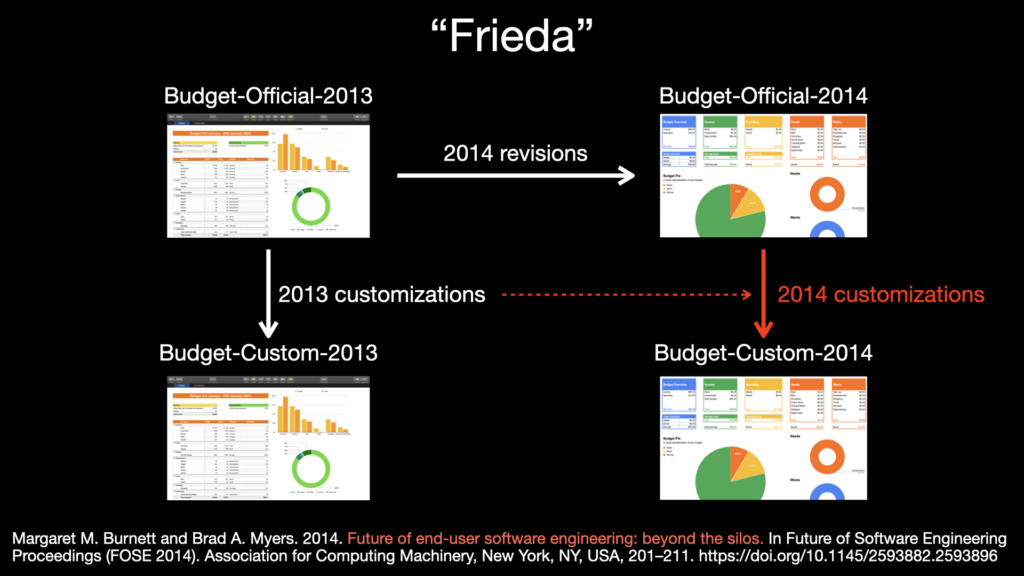

For example, consider “Frieda“, an office manager in charge of her department’s budget tracking. (Frieda was a participant in a set of interviews with spreadsheet users that the first author conducted. Frieda is not her real name.) Every year, the company she works for produces an updated budget tracking spreadsheet with the newest reporting requirements embedded in its structure and formulas. But this spreadsheet is not a perfect fit to the kinds of projects and sub-budgets she manages, so every year Frieda needs to change it. She does this by working with four variants of the spreadsheet at once: the one the company sent out last year (we will call it Official-lastYear), the one she derived from that one to fit her department’s needs (Dept-lastYear), the one the company sent out this year (Official-thisYear), and the one she is trying to put together for this year (Dept-thisYear).

Using these four variants, Frieda exploratively mixes reverse engineering, reuse, programming, testing, and debugging, mostly by trial-and-error. She begins this process by reminding herself of ways she changed last year’s by reverse engineering a few of the differences between Official-lastYear and Dept-lastYear. She then looks at the same portions of Official-thisYear to see if those same changes can easily be made, given her department’s current needs.

She can reuse some of these same changes this year, but copying them into Dept-thisYear is troublesome, with some of the formulas automatically adjusting themselves to refer to Dept-lastYear. She patches these up (if she notices them), then tries out some new columns or sections of Dept-thisYear to reflect her new projects. She mixes in “testing” along the way by entering some of the budget values for this year and eyeballing the values that come out, then debugs if she notices something amiss. At some point, she moves on to another set of related columns, repeating the cycle for these. Frieda has learned over the years to save some of her spreadsheet variants along the way (using a different filename for each), because she might decide that the way she did some of her changes was a bad idea, and she wants to revert to try a different way she had started before.

The Philosophy of Copy and Paste

I have a new paper out with Tomas Petricek: Interaction vs. Abstraction: Managed Copy and Paste, to appear at PAINT’22. [Demo video] I have mixed feelings about this work.

I’ve been talking about the idea ever since my first Subtext paper, and tried to build it several times, but hit many difficulties. This new theory of structure editing I’ve been working on seemed to make it possible. So I had to try it.

The idea is philosophically tantalizing. There is a long-running intellectual debate between those who believe in Logic and Formal Methods as an account of language/cognition/programming and those who reject those accounts as shallow and inadequate. Wittgenstein famously took both sides. I believe programming offers us for the first time a way to substantiate the anti-logic position with a constructive theory that is more than just counter-examples and anecdotes. Managed Copy and Paste is my primary candidate for an Informal Method that takes on the Formal Methods.

Functional abstraction is considered to be the essence of programming languages, enshrined in the holy Lambda Calculus. A key benefit of functions is to centralize change. But if we can track copies and propagate changes between them then having a centralized abstraction is more of an ideal end-state than a necessary condition. The key change of perspective is to move from a program as a static crystalline abstraction to programming as an interactive process of continual adaptation. Managed Copy and Paste subverts functional abstraction and could actually be more ergonomic in practice.

The good news is that it works, and my editing theory handles tricky cases like copying copies. Unfortunately I’m not sure it turns out to actually be more ergonomic. At the very least it needs a lot more UI work. At the moment I’m afraid it is isn’t a slam dunk win, and it needs to be a slam dunk to get anyone to seriously consider such a radical change. Bottom line, I love the idea and its philosophical implications, but in practice it may be more of a luxury than a must-have. A vitamin, not a pain killer.

But what do I know? Tomas convinced me to write this paper to at least put the idea out there and see what happens. What do you think?

Why no-code is uninteresting

in one tweet. Distilled a lot of thought into this one, so lifting it up here.